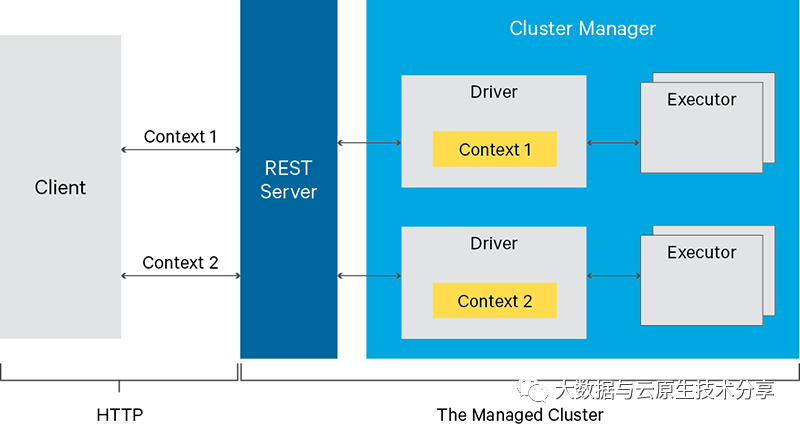

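Livy is a service that exposes a REST interface for interacting with a Spark cluster. It can submit Spark jobs or snippets of Spark code and return the results synchronously or asynchronously. It also manages SparkContexts, either through the RESTful interface or through an RPC client library. Livy simplifies the interaction between Spark and application servers, which makes it possible to use Spark from web and mobile applications.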
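To make the REST workflow concrete, here is a minimal sketch of driving an interactive session with curl. The endpoints (`POST /sessions` and `POST /sessions/{id}/statements`) come from Livy's REST API; the `LIVY_URL` default and all function names are illustrative assumptions, not part of the original setup.

```shell
# Assumed Livy endpoint; replace with your own host and port.
LIVY_URL="${LIVY_URL:-http://localhost:8998}"

# JSON body for POST /sessions; kind is one of spark, pyspark, sparkr, sql.
session_payload() {
  printf '{"kind":"%s"}' "$1"
}

# JSON body for POST /sessions/{id}/statements.
# Escapes backslashes and double quotes (single-line snippets only).
statement_payload() {
  escaped=$(printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"code":"%s"}' "$escaped"
}

# Start an interactive session; the response JSON contains the session id.
create_session() {
  curl -s -X POST -H "Content-Type: application/json" \
    -d "$(session_payload "$1")" "$LIVY_URL/sessions"
}

# Submit a code snippet to session $1. The call returns immediately; the
# result is read later from GET /sessions/$1/statements/<statement id>.
run_statement() {
  curl -s -X POST -H "Content-Type: application/json" \
    -d "$(statement_payload "$2")" "$LIVY_URL/sessions/$1/statements"
}
```

A typical sequence would be `create_session pyspark`, then `run_statement 0 'print(1 + 1)'` once the session reports the `idle` state, where `0` stands for the id returned by the first call.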

Official site: https://livy.incubator.apache.org/

GitHub: https://github.com/apache/incubator-livy

For more background on Apache Livy, see my earlier article: "Spark's Open-Source REST Service: Apache Livy (Spark Client)"

A precompiled Livy deployment package is also provided here; feel free to download it if you need it:

Link: https://pan.baidu.com/s/1pPCbe0lUJ6ji8rvQYsVw9A?pwd=qn7i

Extraction code: qn7i

Dockerfile

FROM myharbor.com/bigdata/centos:7.9.2009

RUN rm -f /etc/localtime && ln -sv /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone

# An export inside RUN does not persist into later layers; ENV does
ENV LANG=zh_CN.UTF-8

### install tools

RUN yum install -y vim tar wget curl less telnet net-tools lsof

RUN groupadd --system --gid=9999 admin && useradd --system -m -d /home/admin --uid=9999 --gid=admin admin

RUN mkdir -p /opt/apache

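# ADD only auto-extracts tar archives, so the zip has to be unpacked explicitly
COPY apache-livy-0.8.0-incubating-SNAPSHOT-bin.zip /opt/apache/

RUN yum install -y unzip && unzip -q /opt/apache/apache-livy-0.8.0-incubating-SNAPSHOT-bin.zip -d /opt/apache/ && rm -f /opt/apache/apache-livy-0.8.0-incubating-SNAPSHOT-bin.zip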

# Matches the /opt/apache/livy paths used by livy-env.sh and the volume mounts
ENV LIVY_HOME=/opt/apache/livy

RUN ln -s /opt/apache/apache-livy-0.8.0-incubating-SNAPSHOT-bin $LIVY_HOME

ADD hadoop-3.3.2.tar.gz /opt/apache/

ENV HADOOP_HOME=/opt/apache/hadoop

RUN ln -s /opt/apache/hadoop-3.3.2 $HADOOP_HOME

ENV HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

ADD spark-3.3.0-bin-hadoop3.tar.gz /opt/apache/

ENV SPARK_HOME=/opt/apache/spark

RUN ln -s /opt/apache/spark-3.3.0-bin-hadoop3 $SPARK_HOME

ENV PATH=${LIVY_HOME}/bin:${HADOOP_HOME}/bin:${SPARK_HOME}/bin:$PATH

RUN chown -R admin:admin /opt/apache

WORKDIR $LIVY_HOME

ENTRYPOINT ${LIVY_HOME}/bin/livy-server start;tail -f ${LIVY_HOME}/logs/livy-root-server.out

[Note] Pay attention to core-site.xml, hdfs-site.xml, and yarn-site.xml inside the Hadoop package.
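A quick pre-build check can catch a missing client configuration early. The sketch below assumes the note above means that these three files must be present and filled in for your cluster, and that the Hadoop tarball has been unpacked locally. `check_hadoop_conf` and its directory argument are hypothetical names used for illustration.

```shell
# Report which Hadoop client config files are missing or empty in directory $1.
# Returns 0 when all three files exist and are non-empty, 1 otherwise.
check_hadoop_conf() {
  conf_dir=$1
  missing=0
  for f in core-site.xml hdfs-site.xml yarn-site.xml; do
    if [ ! -s "$conf_dir/$f" ]; then
      echo "missing or empty: $conf_dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_hadoop_conf hadoop-3.3.2/etc/hadoop` could be run before repacking the tarball and building the image. It only checks that the files exist; whether they point at the right cluster still has to be verified by hand.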

Build the image:

docker build -t myharbor.com/bigdata/livy:0.8.0 . --no-cache

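### Parameter notes

# -t: name (and tag) of the image

# . : build context; the Dockerfile in the current directory is used

# -f: path to the Dockerfile

# --no-cache: do not use the build cache

# Push to Harbor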

docker push myharbor.com/bigdata/livy:0.8.0

helm create livy

livy/values.yaml

replicaCount: 1

image:
  repository: myharbor.com/bigdata/livy
  pullPolicy: IfNotPresent
  # Overrides the image tag whose default is the chart appVersion.
  tag: "0.8.0"

securityContext:
  runAsUser: 9999
  runAsGroup: 9999
  privileged: true

service:
  type: NodePort
  port: 8998
  nodePort: 31998

livy/templates/configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ include "livy.fullname" . }}
  labels:
    {{- include "livy.labels" . | nindent 4 }}
data:
  livy.conf: |-
    livy.spark.master = yarn
    livy.spark.deploy-mode = client
    livy.environment = production
    livy.impersonation.enabled = true
    livy.server.csrf_protection.enabled = false
    livy.server.port = {{ .Values.service.port }}
    livy.server.session.timeout = 3600000
    livy.server.recovery.mode = recovery
    livy.server.recovery.state-store = filesystem
    livy.server.recovery.state-store.url = /tmp/livy
    livy.repl.enable-hive-context = true
  livy-env.sh: |-
    export JAVA_HOME=/opt/apache/jdk1.8.0_212
    export HADOOP_HOME=/opt/apache/hadoop
    export HADOOP_CONF_DIR=/opt/apache/hadoop/etc/hadoop
    export SPARK_HOME=/opt/apache/spark
    export SPARK_CONF_DIR=/opt/apache/spark/conf
    export LIVY_LOG_DIR=/opt/apache/livy/logs
    export LIVY_PID_DIR=/opt/apache/livy/pid-dir
    export LIVY_SERVER_JAVA_OPTS="-Xmx512m"
  spark-blacklist.conf: |-
    spark.master
    spark.submit.deployMode
    # Disallow overriding the location of Spark cached jars.
    spark.yarn.jar
    spark.yarn.jars
    spark.yarn.archive
    # Don't allow users to override the RSC timeout.
    livy.rsc.server.idle-timeout

livy/templates/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "livy.fullname" . }}
  labels:
    {{- include "livy.labels" . | nindent 4 }}
spec:
  {{- if not .Values.autoscaling.enabled }}
  replicas: {{ .Values.replicaCount }}
  {{- end }}
  selector:
    matchLabels:
      {{- include "livy.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      {{- with .Values.podAnnotations }}
      annotations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      labels:
        {{- include "livy.selectorLabels" . | nindent 8 }}
    spec:
      {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      serviceAccountName: {{ include "livy.serviceAccountName" . }}
      securityContext:
        {{- toYaml .Values.podSecurityContext | nindent 8 }}
      containers:
        - name: {{ .Chart.Name }}
          securityContext:
            {{- toYaml .Values.securityContext | nindent 12 }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - name: http
              containerPort: 8998
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
          {{- with .Values.securityContext }}
          securityContext:
            runAsUser: {{ .runAsUser }}
            runAsGroup: {{ .runAsGroup }}
            privileged: {{ .privileged }}
          {{- end }}
          volumeMounts:
            - name: {{ .Release.Name }}-livy-conf
              mountPath: /opt/apache/livy/conf/livy.conf
              subPath: livy.conf
            - name: {{ .Release.Name }}-livy-env
              mountPath: /opt/apache/livy/conf/livy-env.sh
              subPath: livy-env.sh
            - name: {{ .Release.Name }}-spark-blacklist-conf
              mountPath: /opt/apache/livy/conf/spark-blacklist.conf
              subPath: spark-blacklist.conf
      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.affinity }}
      affinity:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      volumes:
        - name: {{ .Release.Name }}-livy-conf
          configMap:
            name: {{ include "livy.fullname" . }}
        - name: {{ .Release.Name }}-livy-env
          configMap:
            name: {{ include "livy.fullname" . }}
        - name: {{ .Release.Name }}-spark-blacklist-conf
          configMap:
            name: {{ include "livy.fullname" . }}

helm install livy ./livy -n livy --create-namespace

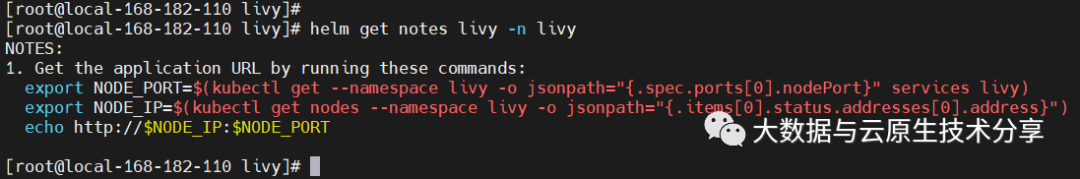

NOTES:

1. Get the application URL by running these commands:

export NODE_PORT=$(kubectl get --namespace livy -o jsonpath="{.spec.ports[0].nodePort}" services livy)

export NODE_IP=$(kubectl get nodes --namespace livy -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

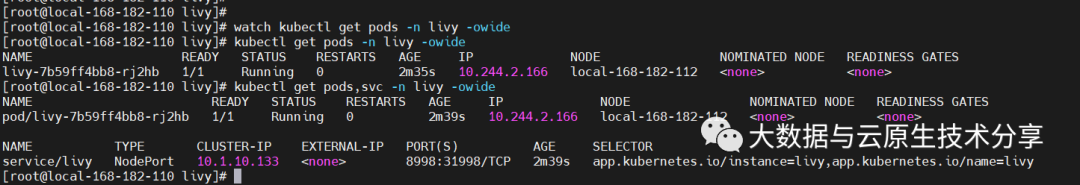

Check the pods and services:

kubectl get pods,svc -n livy -owide

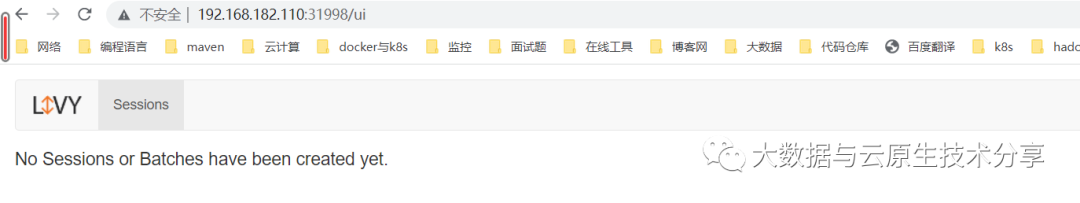

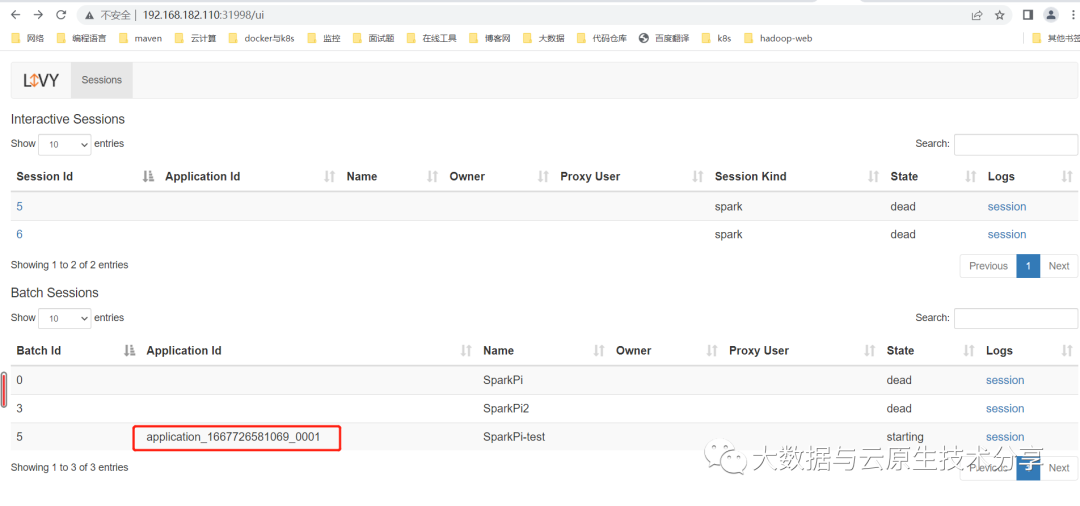

Web UI: http://192.168.182.110:31998/ui

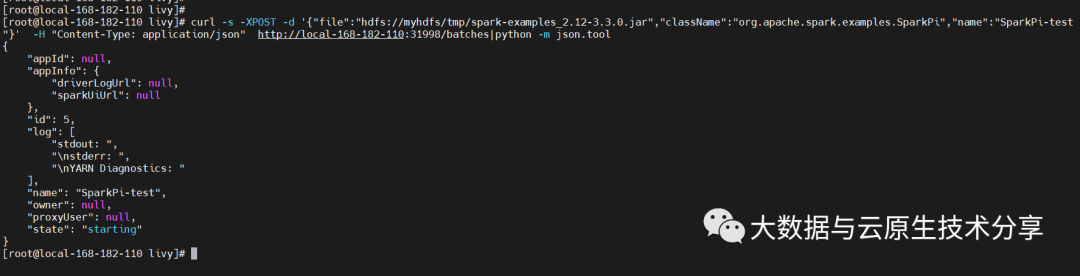
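Before submitting jobs it can help to wait until the server actually answers, since the pod may be running while Livy is still starting. The sketch below polls `GET /sessions`, which is part of Livy's REST API, until it returns HTTP 200. The function names, the attempt count, and the delay are assumptions chosen for illustration.

```shell
# Print the HTTP status code of GET <url>/sessions ("000" if unreachable).
livy_http_code() {
  curl -s -o /dev/null -w '%{http_code}' "$1/sessions"
}

# Poll until Livy answers 200: up to $2 attempts, sleeping $3 seconds between.
wait_for_livy() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=1
  while [ "$i" -le "$attempts" ]; do
    if [ "$(livy_http_code "$url")" = "200" ]; then
      echo "Livy is ready after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Livy did not become ready" >&2
  return 1
}
```

With the NodePort address used in this article, the call would be `wait_for_livy http://192.168.182.110:31998`.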

curl -s -XPOST -d '{ "file":"hdfs://myhdfs/tmp/spark-examples_2.12-3.3.0.jar","className":"org.apache.spark.examples.SparkPi","name":"SparkPi-test"}' -H "Content-Type: application/json" http://local-168-182-110:31998/batches|python -m json.tool
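The `POST /batches` call returns immediately with the batch `id` and an initial state, so the outcome has to be polled. A minimal sketch, assuming the `GET /batches/{id}/state` endpoint of Livy's REST API and the same host as the request above; the function names, the `sed`-based field extraction, and the choice of terminal states are illustrative assumptions rather than a complete client.

```shell
# Assumed endpoint, taken from the curl example above.
LIVY_URL="${LIVY_URL:-http://local-168-182-110:31998}"

# Fetch the state document of batch $1, e.g. {"id":0,"state":"running"}.
batch_state_json() {
  curl -s "$LIVY_URL/batches/$1/state"
}

# Pull the value of the "state" field out of a small JSON document on stdin.
extract_state() {
  sed -n 's/.*"state" *: *"\([^"]*\)".*/\1/p'
}

# Poll batch $1 every $2 seconds, at most $3 times.
# Returns 0 on "success", 1 on a failed state or when attempts run out.
wait_for_batch() {
  batch_id=$1
  delay=${2:-5}
  attempts=${3:-120}
  i=1
  while [ "$i" -le "$attempts" ]; do
    state=$(batch_state_json "$batch_id" | extract_state)
    echo "batch $batch_id: ${state:-unknown}"
    case "$state" in
      success) return 0 ;;
      dead|killed|error) return 1 ;;
    esac
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

For instance, `wait_for_batch 0` would follow the batch whose `id` was `0` in the response to the submission above.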

helm uninstall livy -n livy

Git repository: https://gitee.com/hadoop-bigdata/livy-on-k8s

Editor: 武晓燕. Source: 大数据与云原生技术分享. Tags: cloud native, Apache, Spark

天保基建(000965.SZ):2020年净利降49.78% 基本每股收益0.0859元

天保基建(000965.SZ)披露2020年年度报告,实现营业收入8.2亿元,同比下降32.59%;归属于上市公司股东的净利润9529.34万元,同比下降49.78%;归属于上市公司股东的扣除非经常性

...[详细]

天保基建(000965.SZ)披露2020年年度报告,实现营业收入8.2亿元,同比下降32.59%;归属于上市公司股东的净利润9529.34万元,同比下降49.78%;归属于上市公司股东的扣除非经常性

...[详细]NET序列化工具:SharpSerializer库快速上手并轻松完成序列化操作

NET序列化工具:SharpSerializer库快速上手并轻松完成序列化操作作者:小乖兽技术 2023-10-13 08:28:21开发 开发工具 SharpSerializer库是一个功能强大且广

...[详细]

NET序列化工具:SharpSerializer库快速上手并轻松完成序列化操作作者:小乖兽技术 2023-10-13 08:28:21开发 开发工具 SharpSerializer库是一个功能强大且广

...[详细] 硬件+软件,打造无处不在的数字化工作空间2022-10-12 16:15:41商务办公 戴尔科技很早就开始在保障企业安全的工作环境上进行布局,为企业打造无忧的数字化工作空间,加速释放技术红利。 近年来

...[详细]

硬件+软件,打造无处不在的数字化工作空间2022-10-12 16:15:41商务办公 戴尔科技很早就开始在保障企业安全的工作环境上进行布局,为企业打造无忧的数字化工作空间,加速释放技术红利。 近年来

...[详细]微软 Edge 浏览器将支持直接拖动标签页开启内部分屏,类似 Windows 窗口

微软 Edge 浏览器将支持直接拖动标签页开启内部分屏,类似 Windows 窗口作者:汪淼 2023-10-16 21:42:58系统 浏览器 微软正在开发一种新方法来调用拆分屏幕功能,最近的 Ca

...[详细]

微软 Edge 浏览器将支持直接拖动标签页开启内部分屏,类似 Windows 窗口作者:汪淼 2023-10-16 21:42:58系统 浏览器 微软正在开发一种新方法来调用拆分屏幕功能,最近的 Ca

...[详细]四川巴中恩阳机场新增航线直通18个城市 去年旅客吞吐量38.2万人次

2021年,恩阳机场旅客吞吐量38.2万人次。今年夏秋航季,巴中恩阳机场对航线进行优化,新增新航线。巴中恩阳机场2022年夏秋航季,西安——巴中——海口

...[详细]

2021年,恩阳机场旅客吞吐量38.2万人次。今年夏秋航季,巴中恩阳机场对航线进行优化,新增新航线。巴中恩阳机场2022年夏秋航季,西安——巴中——海口

...[详细] 在 Arch Linux 上安装和使用 Yay作者:Abhishek Prakash 2023-10-15 14:53:22系统 Linux Yay 是最流行的 AUR 助手之一,用于处理 Arch

...[详细]

在 Arch Linux 上安装和使用 Yay作者:Abhishek Prakash 2023-10-15 14:53:22系统 Linux Yay 是最流行的 AUR 助手之一,用于处理 Arch

...[详细] 日前,行车视线从相关渠道获得,新款起亚Sonet的申报图。这台车是起亚印度公司和起亚位于韩国的全球研发总部联合打造,是一台印度地区的特供车型,这次申报也意味着这台车将会进击国内市场。新车定位于小型SU

...[详细]

日前,行车视线从相关渠道获得,新款起亚Sonet的申报图。这台车是起亚印度公司和起亚位于韩国的全球研发总部联合打造,是一台印度地区的特供车型,这次申报也意味着这台车将会进击国内市场。新车定位于小型SU

...[详细] 华纳兄弟对《神奇动物》施了“不死咒”。导演大卫-叶茨David Yates)透漏了这个陷入困境的系列电影的最新情况,《神奇动物》系列电影在原计划的5部电影上映了3部之后就停止了。“对于《神奇动物》,一

...[详细]

华纳兄弟对《神奇动物》施了“不死咒”。导演大卫-叶茨David Yates)透漏了这个陷入困境的系列电影的最新情况,《神奇动物》系列电影在原计划的5部电影上映了3部之后就停止了。“对于《神奇动物》,一

...[详细] 很多人会使用花呗提前消费,无法一次性还款就会办理花呗分期,等手里头有钱了就打算提前还款。虽说花呗分期是支持提前还款,可有不少人认为花呗提前还款是大忌。那么,花呗为什么提前还款是大忌?这里就来给大家分析

...[详细]

很多人会使用花呗提前消费,无法一次性还款就会办理花呗分期,等手里头有钱了就打算提前还款。虽说花呗分期是支持提前还款,可有不少人认为花呗提前还款是大忌。那么,花呗为什么提前还款是大忌?这里就来给大家分析

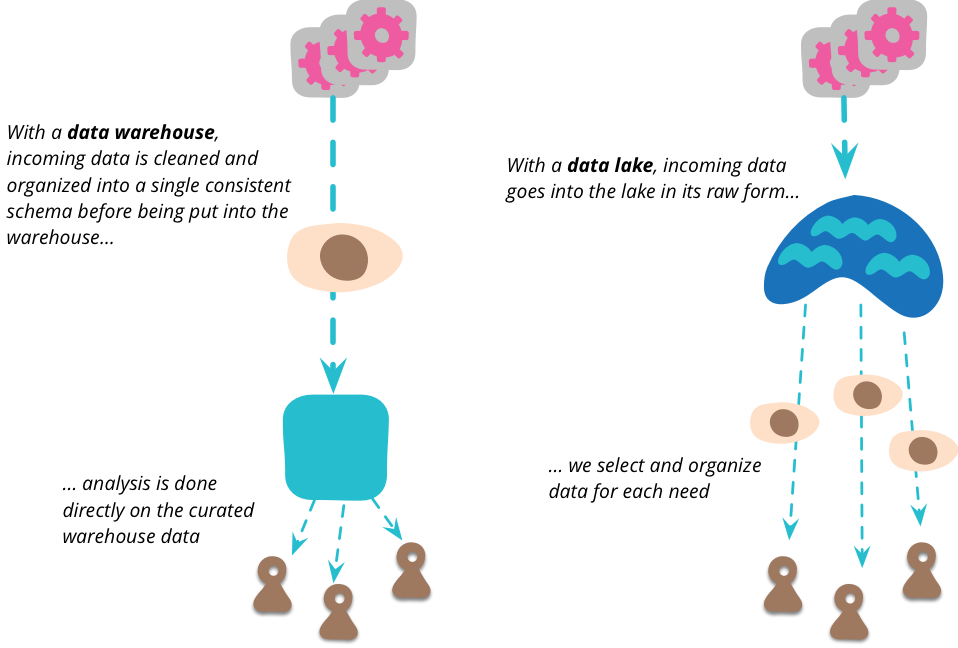

...[详细] 谈谈数据湖和数据仓库作者:晓晓 2022-11-29 17:16:57大数据 数据湖是近十年来出现的一个术语,用于描述大数据世界中数据分析管道的重要组成部分 。 数据湖是近十年来出现的一个术语,用于描

...[详细]

谈谈数据湖和数据仓库作者:晓晓 2022-11-29 17:16:57大数据 数据湖是近十年来出现的一个术语,用于描述大数据世界中数据分析管道的重要组成部分 。 数据湖是近十年来出现的一个术语,用于描

...[详细]